Web performance is now one of the top things teams want to optimize in their apps, and the business cost of a poorly optimized website is clear to everyone. Yet as an industry we fail to recognize its complexity and widely misuse the most common tool for measuring it: Google Lighthouse. If you think good performance equals a good Lighthouse score, you’ve fallen into this trap, and this article is for you.

You may not believe me, but five years ago, when we were writing the first lines of code for Alokai, the topic of frontend performance was almost non-existent in the web-dev space.

At that time, JavaScript SPA frameworks were gaining huge traction. AngularJS and React were already well-established tools, growing more popular every day. Evan You had just released the second major version of Vue.js, which only a few years later became one of the three most popular SPA frameworks in the world.

Back then, almost no one cared how fast the websites built with those tools were. The world was simple, and if you wanted to dig into frontend performance, you ended up on retro-looking websites made by old-school computer geeks. The mainstream didn’t care, but that was about to change because of the little friends in our pockets.

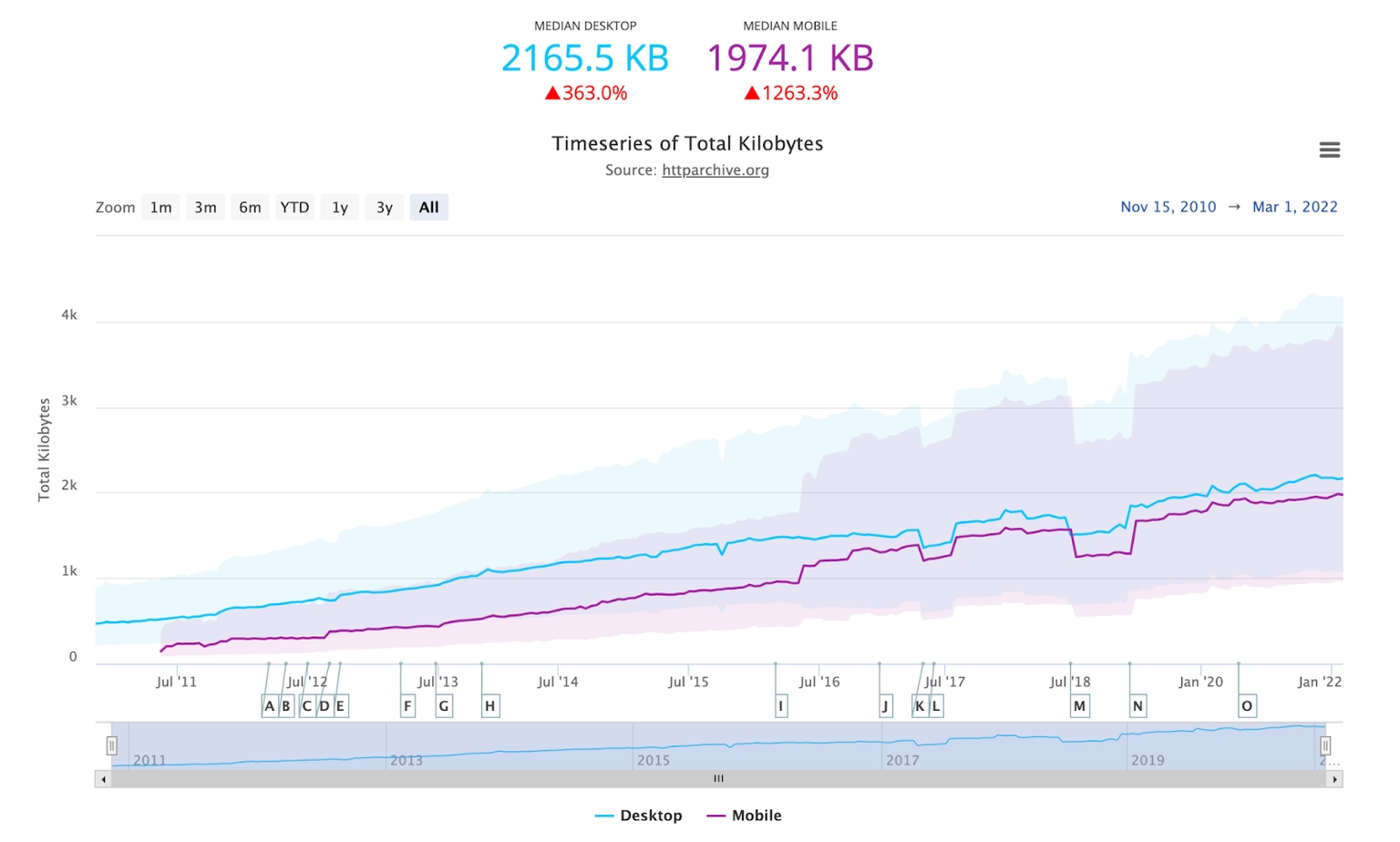

As long as PCs and laptops were our primary machines for consuming the web, no one seemed concerned with the growing size of websites. CPU power and internet bandwidth were improving faster than websites were growing.

This all changed when mobile became the preferred way of consuming the web. According to Google Research in 2017, it took on average 15.3 seconds to fully load a web page on a mobile phone. The impact of poor mobile performance on business started to become clear to everyone, but we still lacked an easy way to connect performance with business results.

Everything changed when Google Lighthouse started gaining popularity. I remember when it was introduced and rapidly adopted in the eCommerce space, following the Progressive Web Apps hype around 2018. Everyone was obsessed with PWAs and with web performance, yet almost no one knew much about either. Unfortunately, not much has changed since then.

What makes Lighthouse so widely adopted is its simplicity. You run a test and get a number between 0 and 100 that tells you how good or bad your website’s performance is. Everyone, even people without a technical background, can understand that.

The reality is not that simple, though: neither web performance nor user experience can be represented by a single number. In addition, there are a lot of nuances in how a Lighthouse audit works, and you have to be aware of them to use it as a reliable source of knowledge. You won’t read about these things in the audit summary, but don’t worry: in this article, I’ll dig into the most important ones.

Getting obsessed with one metric

Let’s start with a simple question. What does Google Lighthouse measure, and how can we use this information? I have a feeling that many people never try to answer it and just blindly assume that a higher score is always better.

As its website states, the goal of Google Lighthouse is to measure “page quality”. The audit divides quality into performance, accessibility, best practices, and SEO. Combined, these should give a good perspective on the quality of the website and thereby help predict the real end-user experience. “Predict” is the key word here, because the audit will not give you any definitive answers about your users’ experience. Some tools will, though, and I will talk about them at the end.

Google has always promoted the performance score as the most important one. In the minds of the general audience, “Lighthouse score” equals “performance score”, so a “quality page” means a “page with a high performance score”.

Don’t get me wrong. Performance is definitely a major factor in page quality and end-user experience, but our obsession with just one of the four categories gives the false impression that performance is the only thing that matters to end users, and that if we get it right, they will have a delightful experience. In reality, so many factors influence user experience that even all four Lighthouse categories together can’t tell you definitively whether it’s good or bad.

Do you even know where this number comes from?

Making business decisions based on a raw number without broader context usually leads to bad decisions, but you can make even worse ones if you don’t even know where this number comes from.

Let me quickly explain how the Lighthouse score is calculated to make sure we are all on the same page.

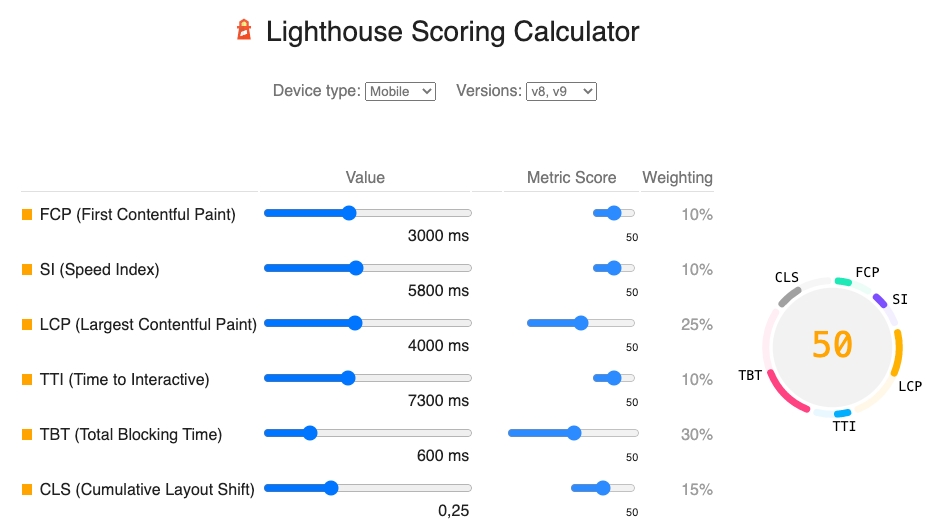

The Lighthouse performance score is calculated from the scores of several individual metrics. Each metric has its own weight, so some contribute more to the final number than others. You can check how individual metrics contribute to the score on the Lighthouse Scoring Calculator website.

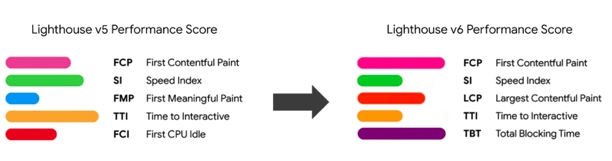
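To make the idea concrete, here is a minimal sketch of the final step: a weighted average of per-metric scores. It assumes the Lighthouse v10 weights (FCP 10%, Speed Index 10%, LCP 25%, TBT 30%, CLS 25%). It also treats each metric score as an already computed value between 0 and 1. Lighthouse derives those values from raw timings with its own scoring curves, and this sketch does not reproduce that step. Check the Scoring Calculator for the weights of the version you actually run.

```typescript
// Per-metric scores in the range 0..1 (1 = best). Lighthouse derives these from
// raw measurements with its own scoring curves; here they are simply inputs.
type MetricScores = Partial<Record<string, number>>;

// Assumed Lighthouse v10 performance weights, in percent. Other versions differ.
const WEIGHTS_V10: Record<string, number> = {
  "first-contentful-paint": 10,
  "speed-index": 10,
  "largest-contentful-paint": 25,
  "total-blocking-time": 30,
  "cumulative-layout-shift": 25,
};

// Weighted average of the metric scores, scaled to 0..100 and rounded.
function performanceScore(scores: MetricScores, weights: Record<string, number>): number {
  let weightedSum = 0;
  let totalWeight = 0;
  for (const metric of Object.keys(weights)) {
    const score = scores[metric];
    if (score === undefined) {
      throw new Error(`Missing score for ${metric}`);
    }
    weightedSum += weights[metric] * score;
    totalWeight += weights[metric];
  }
  return Math.round((weightedSum / totalWeight) * 100);
}

// Example: decent paint metrics, heavy main-thread blocking, no layout shift.
const example: MetricScores = {
  "first-contentful-paint": 0.9,
  "speed-index": 0.8,
  "largest-contentful-paint": 0.6,
  "total-blocking-time": 0.2,
  "cumulative-layout-shift": 1.0,
};
console.log(performanceScore(example, WEIGHTS_V10)); // 63
```

The heavily weighted TBT score pulls the result down. With a perfect TBT score, the same inputs would give 87.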

The algorithm changes with each version. This is nothing unusual: the more Google learns about how individual metrics affect the end-user experience, the more accurate the weights become. This is why it makes no sense to compare your current Lighthouse score to one from a year or two ago. The scoring system has most likely changed in the meantime, so your score could have gone up or down because the algorithm changed, not because you changed something.

I’ve seen many people make that mistake and become convinced that their website was slower than before (even though it wasn’t). When I asked them why they thought so, they showed me a year-old Lighthouse score and compared it to the most recent one.
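A quick way to see why cross-version comparisons mislead is to score one fixed set of metric scores under two weighting tables. The sketch below assumes the Lighthouse v6 and v8 performance weights as listed in the Scoring Calculator. Version 8 raised CLS from 5% to 15% and TBT from 25% to 30%, and lowered FCP, Speed Index, and TTI from 15% to 10%. The example scores are made up, and nothing about the page changes between the two calls.

```typescript
// Metric order used by both tables: FCP, SI, LCP, TTI, TBT, CLS.
// Assumed Lighthouse v6 and v8 performance weights, in percent.
const V6_WEIGHTS = [15, 15, 25, 15, 25, 5];
const V8_WEIGHTS = [10, 10, 25, 10, 30, 15];

// Weighted average of 0..1 metric scores, scaled to 0..100 and rounded.
function weightedScore(scores: number[], weights: number[]): number {
  if (scores.length !== weights.length) {
    throw new Error("scores and weights must have the same length");
  }
  const total = weights.reduce((sum, w) => sum + w, 0);
  const weighted = scores.reduce((sum, s, i) => sum + s * weights[i], 0);
  return Math.round((weighted / total) * 100);
}

// One page, one set of measurements: good paint times, poor TBT, bad CLS.
const samePage = [0.9, 0.8, 0.6, 0.7, 0.2, 0.0];

console.log("v6:", weightedScore(samePage, V6_WEIGHTS)); // v6: 56
console.log("v8:", weightedScore(samePage, V8_WEIGHTS)); // v8: 45
```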

The problem with local testing

Let’s dig deeper into understanding where the magical performance score comes from. We already know half of the truth behind the number, but we still don’t know about the second most important thing — the environment the test is performed in.

Most people use Chrome DevTools to run their Lighthouse audits, and this is probably the least reliable way of doing it. Multiple external factors influence the score. Download and rendering speed depend on your CPU and network. Developers usually run the test on their shiny MacBooks with 5G internet and, in most cases, get better results than real users. In addition, your browser extensions are treated as part of the website you’re auditing.

We can reduce the impact of these factors by running the test in Incognito mode, which excludes Chrome extensions, and by applying CPU and network throttling, but we will still see different results on different devices.

Running Lighthouse locally is not reliable if you want to compare your results with anyone else’s, and it is definitely not reliable for telling whether the end-user experience will be good or bad. Everyone in the company can run the benchmark, and everyone will get a different result. If the tests were performed in different environments, it’s hard to tell whether a new release improved performance or made it worse.

You should always test your website in the same environment and limit the external factors that may influence the score as much as possible.
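One practical way to limit run-to-run noise is to run the audit several times in the same environment and report the median instead of a single result. This is in line with the Lighthouse documentation on score variability. The helper below is a small sketch that takes scores you have already collected (from DevTools, the CLI, or Lighthouse CI). It reports the median together with the spread, so you can see at a glance when an environment is noisy. The function name and the sample numbers are illustrative.

```typescript
interface RunSummary {
  median: number;
  min: number;
  max: number;
  spread: number; // max - min: a rough signal of how noisy the environment is
}

// Summarize several Lighthouse performance scores (0..100) from one environment.
function summarizeRuns(scores: number[]): RunSummary {
  if (scores.length === 0) {
    throw new Error("at least one run is required");
  }
  const sorted = [...scores].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  const median =
    sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
  const min = sorted[0];
  const max = sorted[sorted.length - 1];
  return { median, min, max, spread: max - min };
}

// Five runs of the same page on the same machine (illustrative numbers).
console.log(summarizeRuns([72, 65, 70, 81, 68]));
// { median: 70, min: 65, max: 81, spread: 16 }
```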

Using PageSpeed Insights

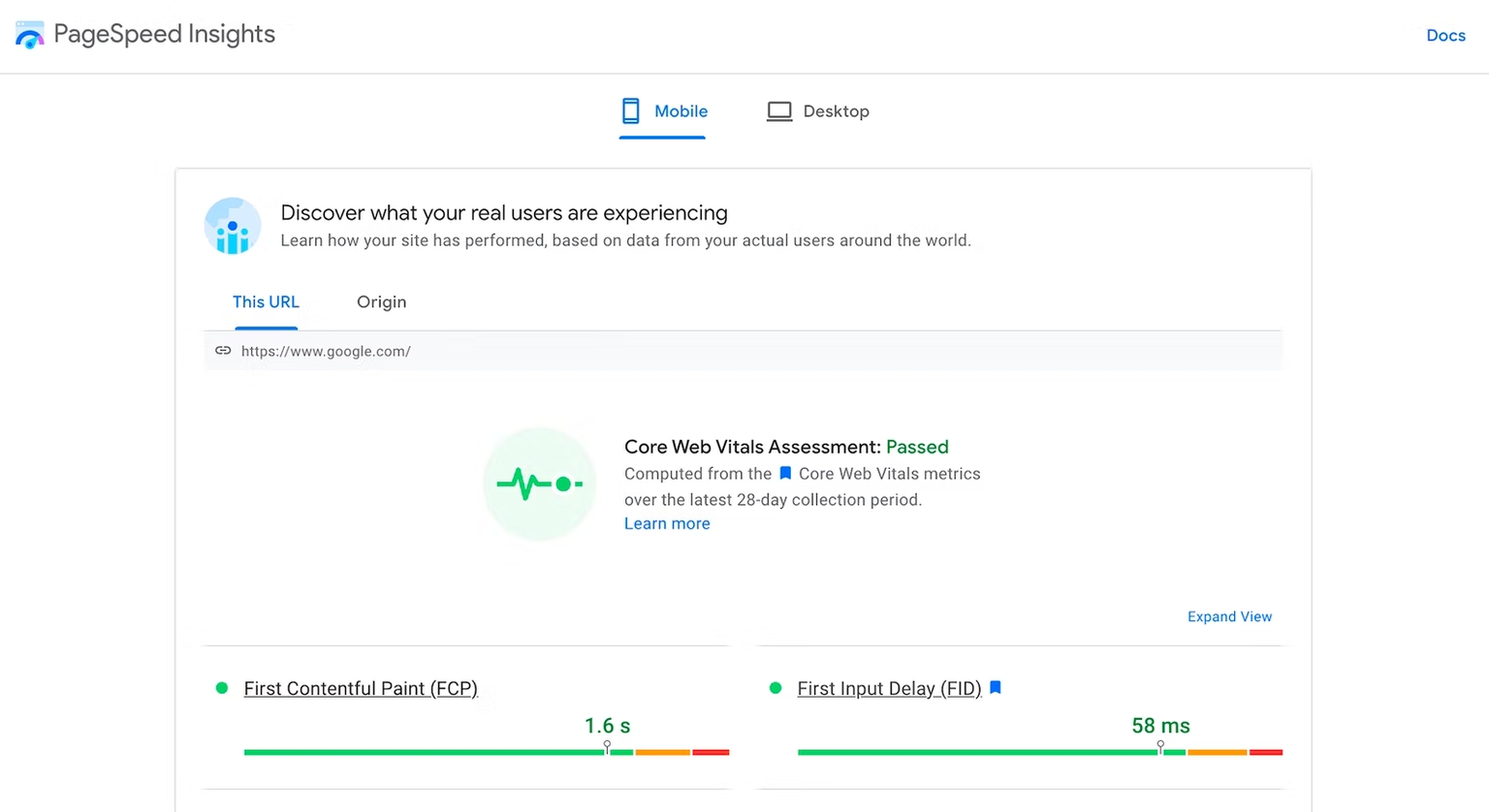

You can get more consistent results by setting up Lighthouse CI in an external environment or by using tools like SpeedCurve, but if you need to quickly inspect a website, I suggest taking a look at PageSpeed Insights.

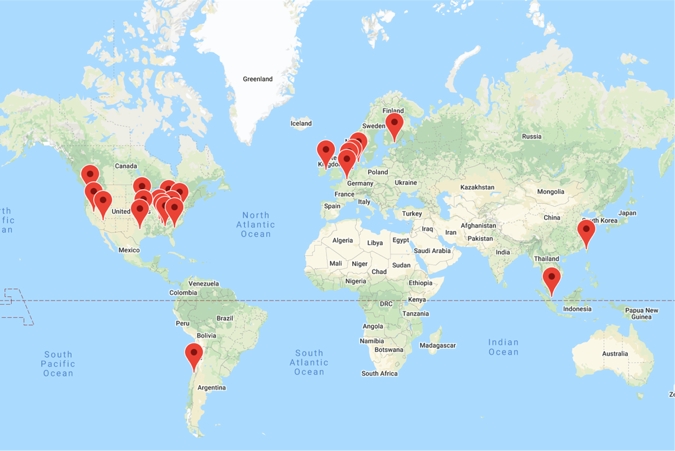

It’s a website made by Google itself that runs quick Lighthouse tests on any website from the closest available Google data center instead of your local machine.

PageSpeed Insights usually uses the data center closest to your location, but it may use another one if the closest is under heavy load. This is why you can sometimes get slightly different results for the same page in tests run one after another, but it’s still much better than using your own laptop.

Even though the PSI score is more consistent, it’s still far from an accurate measurement of real user experience. PageSpeed Insights runs its mobile Lighthouse test on an emulated Moto G4, a typical mid-range smartphone. Unless all of your users have one, they will experience the website differently.
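If you want PageSpeed Insights results in a script instead of the browser, PSI also exposes an HTTP API (v5, `runPagespeed`). The sketch below only builds the request URL and reads the lab performance score from a response object. The response path it assumes is `lighthouseResult.categories.performance.score`, a 0..1 value, which reflects my understanding of the v5 API. Double-check it against the API reference before relying on it. The helper names are hypothetical.

```typescript
// Build a PageSpeed Insights API v5 request URL for a mobile performance audit.
function psiRequestUrl(pageUrl: string, apiKey?: string): string {
  const url = new URL("https://www.googleapis.com/pagespeedonline/v5/runPagespeed");
  url.searchParams.set("url", pageUrl);
  url.searchParams.set("strategy", "mobile"); // "desktop" is the other option
  url.searchParams.set("category", "performance");
  if (apiKey) {
    url.searchParams.set("key", apiKey);
  }
  return url.toString();
}

// Minimal slice of the response used here (assumed v5 shape).
interface PsiResponse {
  lighthouseResult?: {
    categories?: { performance?: { score: number | null } };
  };
}

// Convert the 0..1 lab score into the familiar 0..100 number.
function labPerformanceScore(response: PsiResponse): number | null {
  const score = response.lighthouseResult?.categories?.performance?.score;
  return typeof score === "number" ? Math.round(score * 100) : null;
}

// Usage (Node 18+ or a browser, both provide fetch):
// const res = await fetch(psiRequestUrl("https://example.com"));
// console.log(labPerformanceScore(await res.json()));
```

This is still lab data from an emulated device, so the limitations above apply unchanged.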

Lighthouse can be easily tricked

Another problem is that Lighthouse is just an algorithm: it takes input, processes the data, and produces output. If we know how it works, we can easily cheat it, and that happens more often than you think!

It’s easy to find articles showing how to build a website that gets a perfect Lighthouse score in a particular category while delivering a horrible experience.

It’s just as easy to trick the performance score: you can detect the Lighthouse user agent and serve a different version of your website to auditing tools.

In fact, there are companies out there doing exactly that! If the score is suspiciously high compared to the website’s complexity and real loading experience, there is a high chance someone is trying to trick you. We saw this not long ago in the eCommerce space, where ScandiPWA, one of the PWA frontend solutions, tried to cheat its clients with a fake Lighthouse score.
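If a score looks too good to be true, one rough check is to request the page twice and compare what comes back. The first request uses an ordinary browser user agent. The second uses a user agent containing `Chrome-Lighthouse`, the token Lighthouse appends to the user agents it sends, as far as I know. The sketch below is a heuristic under several assumptions. The user agent strings are hypothetical examples, not exact Lighthouse values. It only sees the initial HTML, not what JavaScript loads later. The 50% threshold is arbitrary. A large difference is a reason to look closer, not proof of cheating.

```typescript
// Hypothetical user agent strings; real Lighthouse UAs vary by version.
const REGULAR_UA =
  "Mozilla/5.0 (Linux; Android 10) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Mobile Safari/537.36";
const LIGHTHOUSE_UA = `${REGULAR_UA} Chrome-Lighthouse`;

interface HtmlStats {
  bytes: number;
  scriptTags: number;
}

// Rough size (UTF-8 bytes) and script-tag count of an HTML document.
function htmlStats(html: string): HtmlStats {
  return {
    bytes: new TextEncoder().encode(html).length,
    scriptTags: (html.match(/<script\b/gi) ?? []).length,
  };
}

// Flag pages whose Lighthouse-facing HTML is much smaller, or has far fewer
// scripts, than what regular visitors receive. The threshold is arbitrary.
function looksCloaked(regular: HtmlStats, audited: HtmlStats, threshold = 0.5): boolean {
  const sizeRatio = regular.bytes === 0 ? 1 : audited.bytes / regular.bytes;
  const scriptRatio =
    regular.scriptTags === 0 ? 1 : audited.scriptTags / regular.scriptTags;
  return sizeRatio < threshold || scriptRatio < threshold;
}

// Fetch a URL with a given user agent (Node 18+ lets you set this header).
function fetchHtml(url: string, userAgent: string): Promise<string> {
  return fetch(url, { headers: { "user-agent": userAgent } }).then((res) => res.text());
}

// Usage:
// const regular = htmlStats(await fetchHtml(url, REGULAR_UA));
// const audited = htmlStats(await fetchHtml(url, LIGHTHOUSE_UA));
// console.log(looksCloaked(regular, audited));
```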

As a side note, things like that wouldn’t happen if we didn’t all believe so blindly that this score is what matters most.

So when does it make sense to use Lighthouse?

After reading all of this, you might get the impression that I think Lighthouse is useless. That is definitely not my point! I think it’s a wonderful tool, and Google’s effort to give developers an easy way to identify potential performance bottlenecks that affect user experience is worth supporting. The problem is not the tool itself but the way we use it or, to be more precise, misuse it.

I have no doubt that Google Lighthouse has contributed to a faster web more than any tool before it, but the name “Lighthouse” has a purpose. Its goal is to guide you toward better page quality, not to give a definitive verdict on whether it’s good or bad. I’ve seen websites with a great user experience and low Lighthouse scores, and vice versa. Good performance is a tradeoff: you always have to sacrifice something to improve it. Sometimes it’s an analytics script, sometimes it’s a feature, and getting rid of them for the sake of performance is not always a good business decision.

To me, Lighthouse shines the most when we want to quickly compare different versions of our website to see whether performance improved or regressed. It’s definitely worth adding Lighthouse checks to your CI/CD pipelines with Lighthouse CI.
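A check built on this idea does not need to care about the absolute score. It only needs to know how a candidate build compares with a baseline measured in the same environment. The sketch below compares the medians of several runs and flags a regression when the drop exceeds a tolerance. The function names and the 5-point default tolerance are assumptions for illustration, not Lighthouse CI’s own API. Lighthouse CI’s built-in assertions, such as a minimum category score, cover the simpler fixed-threshold case.

```typescript
// Median of a non-empty list of scores.
function median(values: number[]): number {
  if (values.length === 0) throw new Error("no values");
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

interface Comparison {
  baseline: number;
  candidate: number;
  delta: number; // positive = improvement, negative = regression
  regressed: boolean;
}

// Compare two builds audited in the same environment. Only a drop larger
// than `tolerance` points counts as a regression, to absorb normal noise.
function compareBuilds(
  baselineRuns: number[],
  candidateRuns: number[],
  tolerance = 5,
): Comparison {
  const baseline = median(baselineRuns);
  const candidate = median(candidateRuns);
  const delta = candidate - baseline;
  return { baseline, candidate, delta, regressed: delta < -tolerance };
}

const result = compareBuilds([78, 80, 76, 79, 81], [70, 72, 69, 74, 71]);
console.log(result);
// { baseline: 79, candidate: 71, delta: -8, regressed: true }
```

In a real pipeline you would fail the job when `regressed` is true. Comparing medians rather than single runs keeps one noisy audit from blocking a release.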

You should also audit websites of similar complexity in your industry to get a sense of a realistic score to aim for. An eCommerce website rarely scores above 60, while a blog often hits 100. It’s important to know what a “good” score is in your case.

Measuring real user performance

Lighthouse is not a good tool to measure actual user experiences. Synthetic data will never tell you anything about that. To understand how users are experiencing your website, you have to get the data from… your users.

You don’t need to set up any additional monitoring tools to check that! If you audit your page on PageSpeed Insights, at the very top you will see how it scores on the four most important performance metrics (which also affect your SEO results).

This data is collected over the past 28 days from real users of your website on Chrome, so keep that in mind when comparing results before and after an update. To be included, your website needs to be publicly crawlable and receive enough traffic from Chrome users.

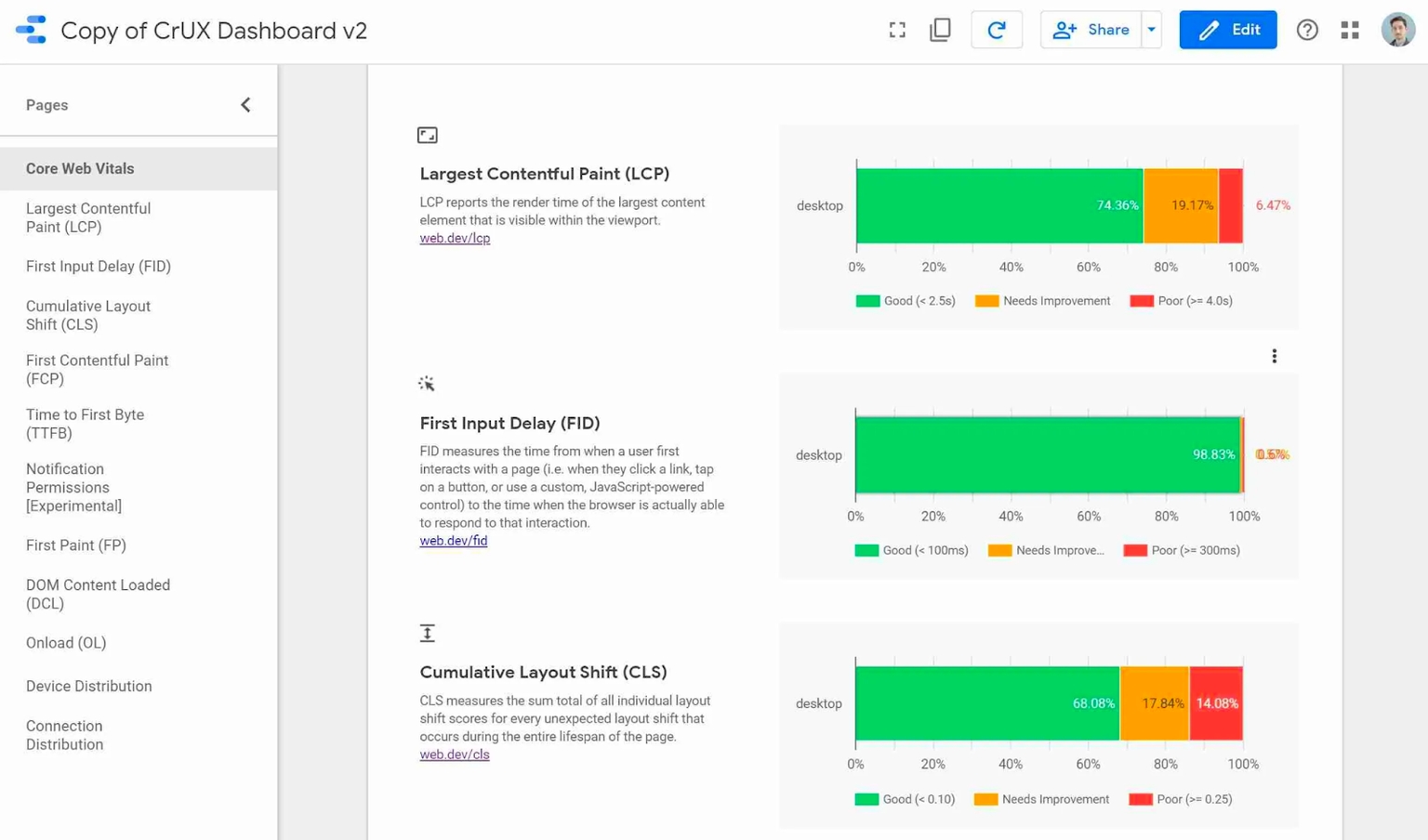
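Field data is usually judged at the 75th percentile of page loads against fixed thresholds. The sketch below encodes the Core Web Vitals thresholds as published by Google. For LCP, “good” is up to 2.5 s and “poor” is above 4 s. For INP, which replaced FID as a Core Web Vital in 2024, “good” is up to 200 ms and “poor” is above 500 ms. For CLS, “good” is up to 0.1 and “poor” is above 0.25. The p75 values in the example are made up.

```typescript
type Rating = "good" | "needs-improvement" | "poor";

// Core Web Vitals thresholds: [upper bound for "good", upper bound for "needs improvement"].
// LCP and INP are in milliseconds; CLS is unitless.
const THRESHOLDS = {
  lcp: [2500, 4000],
  inp: [200, 500],
  cls: [0.1, 0.25],
} as const;

type Metric = keyof typeof THRESHOLDS;

// Rate a 75th-percentile field value for one metric.
function rate(metric: Metric, p75: number): Rating {
  const [good, needsImprovement] = THRESHOLDS[metric];
  if (p75 <= good) return "good";
  if (p75 <= needsImprovement) return "needs-improvement";
  return "poor";
}

// Illustrative p75 values for one origin.
const field: Record<Metric, number> = { lcp: 3100, inp: 180, cls: 0.31 };
for (const metric of Object.keys(field) as Metric[]) {
  console.log(metric, rate(metric, field[metric]));
}
// lcp needs-improvement
// inp good
// cls poor
```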

This real-world data comes from the Chrome User Experience Report (CrUX), which collects performance metrics from the devices of real Chrome users who have enabled browsing-history sync and usage statistics reporting. You can easily access the history of your metrics in the CrUX Dashboard in Google Data Studio (now Looker Studio).

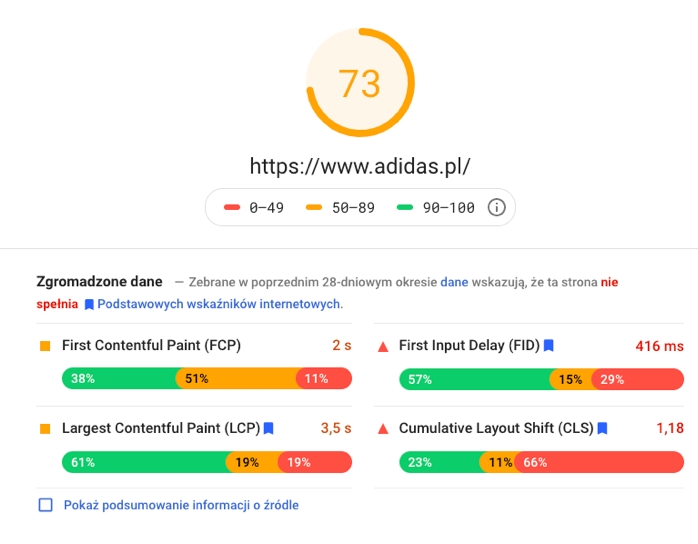
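The same CrUX data is also available programmatically through the Chrome UX Report API. The sketch below builds a `records:queryRecord` request for an origin and reads the 75th-percentile LCP from the response. It relies on the request fields `origin`, `formFactor`, and `metrics` and on the response path `record.metrics.largest_contentful_paint.percentiles.p75`, which reflect my understanding of the API. Check them against the official reference. The API requires a key, and `YOUR_API_KEY` below is a placeholder. The helper names are hypothetical.

```typescript
const CRUX_ENDPOINT = "https://chromeuxreport.googleapis.com/v1/records:queryRecord";

interface CruxQuery {
  origin: string;
  formFactor?: "PHONE" | "DESKTOP" | "TABLET";
  metrics?: string[];
}

// Minimal slice of the response shape used here (assumed).
interface CruxResponse {
  record?: {
    metrics?: Record<string, { percentiles?: { p75?: number | string } }>;
  };
}

// Build fetch arguments for a phone-only LCP query for one origin.
function cruxRequest(origin: string, apiKey: string): { url: string; init: RequestInit } {
  const body: CruxQuery = {
    origin,
    formFactor: "PHONE",
    metrics: ["largest_contentful_paint"],
  };
  return {
    url: `${CRUX_ENDPOINT}?key=${encodeURIComponent(apiKey)}`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(body),
    },
  };
}

// Read p75 LCP in milliseconds; CrUX may return some percentiles as strings.
function p75Lcp(response: CruxResponse): number | null {
  const p75 = response.record?.metrics?.largest_contentful_paint?.percentiles?.p75;
  if (p75 === undefined) return null;
  const value = Number(p75);
  return isFinite(value) ? value : null;
}

// Usage:
// const { url, init } = cruxRequest("https://example.com", "YOUR_API_KEY");
// const res = await fetch(url, init);
// console.log(p75Lcp(await res.json()));
```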

If you run a PSI benchmark on a few websites, you will quickly notice that in many cases there is no relation between the Lighthouse score and performance data from real users. For example, below you can find a screenshot from an audit of adidas.pl (performed some time ago). Even though the Lighthouse score is rather high, the real-world performance is terrible.

This is why you shouldn’t blindly optimize the Lighthouse score, and why you should always look at real user data.

Summary

Lighthouse is a great tool if it is used in the right way. You can (and should) use it to get a general sense of the performance of your website. No other tool will give you so much ready-to-process information with just a few clicks.

You just need to be aware of where this data comes from and what exactly it represents so you can make conscious decisions based on it. Not everything that Lighthouse will identify as a bottleneck is an actual bottleneck and it’s not always worth optimizing your performance at all costs.

Lighthouse is best at identifying whether the performance of a specific website improved or regressed, but the score itself may have little to do with the performance experienced by real-world users. To learn about that, you need to collect real user data.